In the world of digital image processing, image manipulation is essential across a wide range of disciplines, from medical diagnostics to advanced graphic design. Central to this field are intensity transformations, a cornerstone technique for enhancing and correcting images. By precisely adjusting pixel values, intensity transformations make it possible to refine the brightness, contrast, and overall appearance of an image. This post explores the main aspects of these transformations, with particular emphasis on their implementation in Python.

The techniques discussed in this post operate directly on the pixels of an image, meaning they work in the spatial domain. In contrast, some image processing techniques are formulated in other domains, such as the frequency domain. These methods transform the input image into that domain, apply the processing there, and then apply an inverse transformation to return to the spatial domain.
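None of the transformations in this post work this way, but the transform, process, inverse pattern can be sketched in a few lines. This is a minimal illustration assuming the frequency domain (NumPy's 2-D FFT) and a hypothetical ideal low-pass mask; the function name `frequency_domain_lowpass` and its `radius` parameter are made up for this example.

```python
import numpy as np

def frequency_domain_lowpass(img, radius):
    """Hypothetical example: ideal low-pass filtering done in the frequency domain."""
    f = img.astype(np.float64)
    # 1. Forward transform: spatial domain -> frequency domain (zero frequency moved to the centre)
    F = np.fft.fftshift(np.fft.fft2(f))
    # 2. Process in the frequency domain: keep only frequencies within `radius` of the centre
    rows, cols = f.shape
    y, x = np.ogrid[:rows, :cols]
    mask = (y - rows // 2) ** 2 + (x - cols // 2) ** 2 <= radius ** 2
    G = F * mask
    # 3. Inverse transform: back to the spatial domain (the imaginary part is only rounding noise)
    return np.real(np.fft.ifft2(np.fft.ifftshift(G)))

# Random 64x64 test image, used only for illustration
img = np.random.default_rng(0).integers(0, 256, size=(64, 64)).astype(np.uint8)
smoothed = frequency_domain_lowpass(img, radius=10)
print(img.std(), smoothed.std())  # the low-pass result varies less than the input
```

With a radius large enough to keep every frequency, the round trip returns the original image, which is a quick sanity check that the forward and inverse transforms match.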

The spatial domain processes covered in this post are based on the expression:

\begin{equation*} g(x,y) = T \left[ f(x,y) \right] \end{equation*}

Where $f(x,y)$ represents the input image, $g(x,y)$ is the output image, and $T$ is the transformation. This transformation is an operator applied to the pixels of the image $f$ and is defined over a neighborhood of the point $(x,y)$. The smallest possible neighborhood has size $1 \times 1$; in this case, $g$ depends only on the value of $f$ at a single point (pixel), which makes $T$ an intensity transformation. Denoting the intensity of $f$ by $r = f(x,y)$ and the intensity of $g$ by $s = g(x,y)$, intensity transformation functions can be expressed in the simplified form:

\begin{equation*} s = T \left( r \right) \end{equation*}
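Because $s = T(r)$ depends only on $r$, and an 8-bit image has just 256 possible values of $r$, any such transformation can be precomputed once as a lookup table and then applied to every pixel by indexing. The sketch below assumes 8-bit grayscale input; `build_lut`, `apply_lut`, and the square-root curve are hypothetical choices made only for illustration.

```python
import numpy as np

def build_lut(T):
    # Evaluate s = T(r) once for every possible 8-bit intensity r = 0..255
    r = np.arange(256, dtype=np.float64)
    return np.clip(np.round(T(r)), 0, 255).astype(np.uint8)

def apply_lut(img, lut):
    # Integer-array indexing replaces every pixel value r with lut[r]
    return lut[img]

# Hypothetical transformation used only for illustration
lut = build_lut(lambda r: 255.0 * np.sqrt(r / 255.0))

img = np.array([[0, 64], [128, 255]], dtype=np.uint8)
print(apply_lut(img, lut))
```

OpenCV provides the same operation for 8-bit images as `cv2.LUT(img, lut)`. The lookup-table form makes the "1 × 1 neighborhood" idea explicit: the output at a pixel is fully determined by the input value at that same pixel.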

There are many types of intensity transformations, including Binary, Power-Law, and Logarithmic transformations, among others. In the following sections, these transformations are explained mathematically and implemented in Python. For this, we need to import the following libraries:

```python
# Importing libraries
import numpy as np
import cv2
import matplotlib.pyplot as plt
```

- NumPy (numpy): Essential for numerical operations, NumPy offers extensive support for arrays and matrices, which are fundamental in handling image data.

- OpenCV (cv2): A versatile library for image processing tasks. We use OpenCV for reading, processing, and manipulating images.

- Matplotlib (matplotlib.pyplot): Useful for visualizing images and their transformations; Matplotlib helps us display the results of our image processing tasks.

To effectively demonstrate the results of intensity transformations, we implemented the function plot_images, designed to display multiple images in a single figure using subplots. This function will be particularly useful in the following sections of the post to showcase the before-and-after effects of applying various transformations.

```python
# Function to plot multiple images using subplots
def plot_images(images, titles, n_rows, n_cols, figsize):
    # Create a figure with the specified grid and size;
    # squeeze=False always returns a 2-D array of axes, even for a 1x1 grid
    fig, axes = plt.subplots(n_rows, n_cols, figsize=figsize, squeeze=False)

    # Flatten the axes array for easy indexing
    axes = axes.flatten()

    # Loop through the images and titles to display them
    for i, (img, title) in enumerate(zip(images, titles)):
        # Display image in grayscale
        axes[i].imshow(img, cmap='gray')
        # Set the title for each subplot
        axes[i].set_title(title, fontsize=22)
        # Hide axes ticks for a cleaner look
        axes[i].axis('off')

    # Adjust the layout to prevent overlap
    plt.tight_layout()

    # Show the compiled figure with all images
    plt.show()
```

This function takes an array of images and their corresponding titles, along with the desired number of rows and columns for the subplots, and the size of the figure. It then creates a figure with the specified layout, displaying each image with its title. The images are shown in grayscale, which is often preferred for intensity transformation demonstrations. The function also ensures a clean presentation by hiding the axes ticks and adjusting the layout to prevent overlap.

Sometimes it's necessary to scale the intensity values of an image to a specific range, particularly when dealing with intensity transformations. This is crucial for ensuring that the transformed pixel values remain within the displayable range of 0 to 255. To facilitate this, we implement an auxiliary function, scaling, which will be used in subsequent sections of the post.

```python
# Function to linearly scale the intensity values of an image to the range [min_f, max_f]
def scaling(Img, min_f, max_f, min_in=None, max_in=None):
    # Work in floating point so that subtraction cannot wrap around for integer inputs
    Img = np.asarray(Img, dtype=np.float64)

    # Determine the current minimum and maximum values of the image
    x_min = Img.min()
    x_max = Img.max()

    # Override the min and max values if specified
    if min_in is not None:
        x_min = min_in
        x_max = max_in

    # Scale the image to the new range [min_f, max_f]
    Img_scaled = min_f + ((max_f - min_f) / (x_max - x_min)) * (Img - x_min)

    return Img_scaled
```

In this function, Img is the input image whose pixel values need scaling, and min_f and max_f define the new range to which its intensity values will be mapped. The optional parameters min_in and max_in specify a custom range for the original intensity values (both should be given together); if they are not provided, the function uses the actual minimum and maximum values of Img. The function computes the scaled image Img_scaled by linearly mapping the original pixel values onto the new range.
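A small worked example makes the linear mapping concrete. The sketch below is a compact restatement of `scaling`, with one assumption that differs from the function above: each of `min_in` and `max_in` is overridden independently. The input values are made up for illustration.

```python
import numpy as np

def scaling(Img, min_f, max_f, min_in=None, max_in=None):
    # Same linear mapping as above: [x_min, x_max] -> [min_f, max_f]
    Img = np.asarray(Img, dtype=np.float64)
    x_min = Img.min() if min_in is None else min_in
    x_max = Img.max() if max_in is None else max_in
    return min_f + ((max_f - min_f) / (x_max - x_min)) * (Img - x_min)

values = np.array([10.0, 20.0, 30.0])

# Actual range [10, 30] -> [0, 255]: slope 255 / 20 = 12.75, giving 0, 127.5, 255
print(scaling(values, 0, 255))

# Custom input range [0, 40] -> [0, 255]: slope 255 / 40 = 6.375, giving 63.75, 127.5, 191.25
print(scaling(values, 0, 255, min_in=0, max_in=40))
```

With the custom input range, the output no longer reaches 0 or 255, because the data do not span the whole declared input range.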

Gamma (Power-Law) Transformations

The power-law transformation, also known as the Gamma transformation, uses a power-law function to adjust the pixel values of an image. This transformation is versatile: it can emphasize specific intensity ranges and enhance particular details in an image.

The Gamma Transformation is mathematically represented as:

\begin{equation*} s = cr^\gamma \end{equation*}

Here, $c$ and $\gamma$ are positive constants. The constant $c$ is typically omitted (set to $1$) because the result is rescaled for display anyway: the scaling function defined above maps the output to the range 0 to 255, and Matplotlib's imshow, by default, maps the lowest and highest pixel values of a grayscale image to black and white, respectively, so multiplying by $c$ has no visible effect. The parameter $\gamma$ determines the shape of the curve that maps the intensity values $r$ to the output values $s$. Below is a graphical representation of the curves generated using different values of $\gamma$:

As illustrated in the previous figure, for intensities normalized to the range $[0, 1]$, if $\gamma$ is less than $1$, the mapping is weighted toward brighter (higher) output values. Conversely, if $\gamma$ is greater than $1$, the mapping is weighted toward darker (lower) output values. When $\gamma$ equals $1$, the transformation is a linear mapping that preserves the original intensity distribution.
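The same behavior can be checked numerically. This sketch assumes intensities normalized to $[0, 1]$ and $c = 1$; the three $\gamma$ values are arbitrary examples, one below $1$, one equal to $1$, and one above $1$.

```python
import numpy as np

# Normalized input intensities r in [0, 1]: 0, 0.25, 0.5, 0.75, 1
r = np.linspace(0.0, 1.0, 5)

# s = r ** gamma with c = 1
curves = {gamma: r ** gamma for gamma in (0.4, 1.0, 2.5)}

for gamma, s in curves.items():
    print(f"gamma = {gamma}: s = {np.round(s, 3)}")
```

For $r = 0.5$, $\gamma = 0.4$ lifts the output to about $0.758$, while $\gamma = 2.5$ pushes it down to about $0.177$. Every curve still maps $0$ to $0$ and $1$ to $1$, so only the distribution of the intermediate intensities changes.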

Python Implementation

After discussing the theory behind Gamma transformations, we are ready for the practical implementation. We implement the gamma_transformation function, which applies the Gamma transformation to an image, allowing us to adjust the image's intensity values based on the Gamma curve.

```python
# Function to apply the Gamma Transformation
def gamma_transformation(Img, gamma=1, c=1):
    # Apply the Gamma transformation formula in floating point
    # (raising integer pixel values to a power directly can lose precision or overflow)
    s = c * (Img.astype(np.float64) ** gamma)

    # Scale the transformed image to the range of 0-255 and convert to uint8
    Img_transformed = np.array(scaling(s, 0, 255), dtype=np.uint8)

    return Img_transformed
```

The function first applies the Gamma transformation formula to the input image Img based on the values of the parameters gamma and c. It then uses the previously defined scaling function to scale the transformed pixel values back to the range of 0-255. This step is crucial to ensure that the transformed image can be properly displayed and processed. The transformed image is then converted to an 8-bit unsigned integer format (np.uint8), which is the standard format in OpenCV.
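Because the result is rescaled to 0-255, the constant $c$ cancels out: it multiplies both the numerator and the denominator of the min-max rescaling. The sketch below checks this on a hypothetical 16×16 ramp image; `rescale_to_255` is a stand-in for `scaling(s, 0, 255)` so that the block runs on its own.

```python
import numpy as np

def rescale_to_255(x):
    # Linear min-max rescaling to [0, 255], mirroring scaling(s, 0, 255)
    return 255.0 * (x - x.min()) / (x.max() - x.min())

# Hypothetical test image: a 16x16 ramp containing every 8-bit intensity once
img = np.arange(256, dtype=np.uint8).reshape(16, 16)
r = img.astype(np.float64)

gamma = 4.0
reference = rescale_to_255(1.0 * r ** gamma)

for c in (0.5, 10.0, 1000.0):
    # c scales (s - min) and (max - min) by the same factor, so it cancels
    scaled = rescale_to_255(c * r ** gamma)
    print(c, np.allclose(scaled, reference))
```

Each line should report `True`, which is why the examples in this post leave `c` at its default value of `1`.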

Now that we have defined the gamma_transformation function, we can apply it to a real image to observe the effects of this transformation. Below is the Python code used to load an image, apply the Gamma transformation, and display the original and transformed images:

```python
# Load an image in grayscale
Im1 = cv2.imread('/content/Image_1.png', cv2.IMREAD_GRAYSCALE)

# Apply the Gamma transformation with gamma = 4.0
Im1_transformed = gamma_transformation(Im1, gamma=4.0)

# Prepare images for display
images = [Im1, Im1_transformed]
titles = ['Original Image', 'Gamma Transformation']

# Use the plot_images function to display the original and transformed images
plot_images(images, titles, 1, 2, (15, 7))
```

We first load an image in grayscale using OpenCV's imread function. The image is read from a specified path (/content/Image_1.png). We then apply the Gamma transformation to this image using our gamma_transformation function with a Gamma value of $4.0$. Finally, we use the previously defined plot_images function to display the original and transformed images. The resultant images are shown below:

We can observe that the original image is very bright. To emphasize the finer details, we chose $\gamma = 4$, which produces an output image with darker intensity values. This transformation reveals details that were previously hard to see because of the high brightness.

Log Transformations

The logarithmic transformation employs logarithmic functions to modify the pixel values of an image. This technique effectively redistributes the pixel values, accentuating details in darker areas while compressing the details in brighter areas. This characteristic makes it particularly effective in scenarios where it's necessary to enhance the visibility of features in darker regions of an image while maintaining the overall balance of the image.

The general form of the Log Transformation is:

\begin{equation*} s = c\log(1 + r) \end{equation*}

Where $c$ is a constant, $\log$ denotes the natural logarithm (the inverse of the exponential function), and it is assumed that $r \geq 0$. Below is a graphical representation of the Log function curve, along with some curves corresponding to Gamma Transformations:

From this illustration, we can observe that the Log function behaves similarly to Gamma Transformations with $\gamma < 1$. This means that a transformation resembling the Log function can be obtained by choosing an appropriate value of $\gamma$ in a Gamma Transformation.
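One way to make "an appropriate value of $\gamma$" concrete is to search for the $\gamma$ whose curve stays closest to the normalized Log curve. This is a hedged sketch: it assumes 8-bit intensities, normalizes both curves to $[0, 1]$, and uses the maximum absolute difference over a coarse grid of candidate $\gamma$ values as the (arbitrary) matching criterion.

```python
import numpy as np

r = np.arange(256, dtype=np.float64)

# Log transformation rescaled to [0, 1]; log(1 + 0) = 0, so dividing by the maximum is enough
log_curve = np.log1p(r) / np.log1p(255.0)

# Gamma curves on normalized intensities, for gamma = 0.05, 0.06, ..., 3.0
r_norm = r / 255.0
candidate_gammas = np.linspace(0.05, 3.0, 296)
errors = [np.max(np.abs(r_norm ** g - log_curve)) for g in candidate_gammas]

best_gamma = candidate_gammas[int(np.argmin(errors))]
print(f"closest gamma on this grid: {best_gamma:.2f} (max abs error {min(errors):.3f})")
```

The best match lands below $1$, consistent with the observation above. The match is not exact, since the Log curve rises more steeply near $r = 0$ than any single power law fitted this way.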

Python Implementation

Following our discussion of the logarithmic transformation, we now turn to its practical implementation. The log_transformation function applies the Log Transformation to an image:

```python
# Function to apply the Log Transformation
def log_transformation(Img, c=1):
    # Apply the Log transformation formula
    s = c * np.log(1 + np.array(Img, dtype=np.uint16))

    # Scale the transformed image to the range of 0-255 and convert to uint8
    Img_transformed = np.array(scaling(s, 0, 255), dtype=np.uint8)

    return Img_transformed
```

The function first converts the input image to a wider data type (np.uint16). This prevents overflow when computing $1 + r$: with 8-bit unsigned integers, $255 + 1$ wraps around to $0$, and $\log(0)$ is undefined (NumPy returns -inf). It then applies the Log Transformation formula, which produces floating-point values. After the transformation, the function uses the previously defined scaling function to scale the transformed pixel values back to the range of 0-255, and the result is converted to an 8-bit unsigned integer format (np.uint8).
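The overflow that the np.uint16 conversion guards against is easy to reproduce. The three pixel values below are arbitrary examples.

```python
import numpy as np

img = np.array([0, 128, 255], dtype=np.uint8)

# uint8 arithmetic wraps around modulo 256, so the brightest pixel becomes 0
wrapped = 1 + img
# Widening to uint16 first keeps 255 + 1 = 256
widened = 1 + img.astype(np.uint16)

print(wrapped, widened)

with np.errstate(divide='ignore'):
    # log(0) for the wrapped pixel gives -inf instead of log(256)
    print(np.log(wrapped))
print(np.log(widened))
```

Without the conversion, the brightest pixels of the input would end up as the darkest values after the Log Transformation and rescaling.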

After exploring the concept of logarithmic transformations, we now apply this technique to a real image using the following code:

```python
# Load an image in grayscale
Im2 = cv2.imread('/content/Image_2.png', cv2.IMREAD_GRAYSCALE)

# Apply the Log transformation
Im2_transformed = log_transformation(Im2)

# Prepare images for display
images = [Im2, Im2_transformed]
titles = ['Original Image', 'Logarithmic Transformation']

# Use the plot_images function to display the original and transformed images
plot_images(images, titles, 1, 2, (11, 7))
```

We load an image in grayscale from a specified path (/content/Image_2.png). We then apply the Log Transformation to this image using our log_transformation function. This transformation is expected to enhance the visibility of features in darker regions of the image. Finally, we use the plot_images function to display the original and transformed images side by side. The results are shown below:

The original image contains significant details obscured by darkness. To address this, we applied the Log Transformation. The resulting output image reveals details in the darkest regions that were previously hard to see.

To Be Continued...

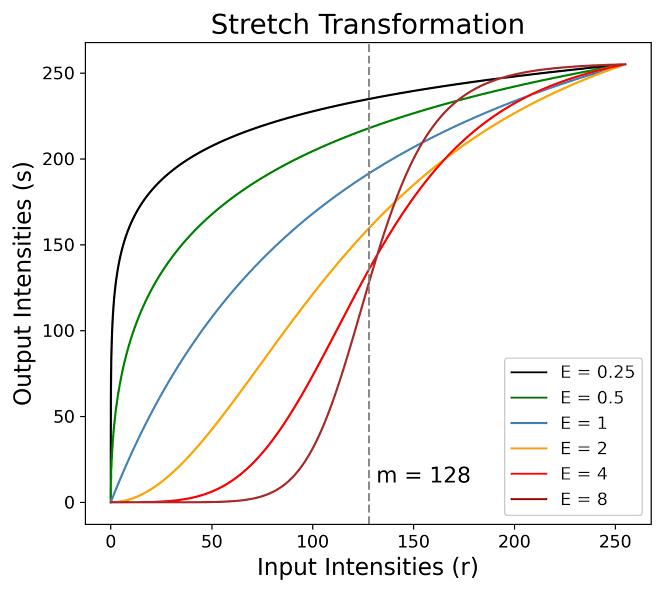

In the next post, we'll continue our exploration with the Contrast-Stretching transformation, and both the Threshold and Adaptive Threshold transformations. Stay tuned for a more in-depth look at these techniques. For continuity, make sure to read the second part of this series here.